DapuStor Haishen5 H5100 7.68TB Enterprise SSD Review - Random Read Champion

This review was created by TweakTown.

“

DapuStor is carving out a reputation for delivering the highest performance where it matters most, and that is exactly what we found in its Haishen5 Gen5 SSD.

Introduction and Drive Details

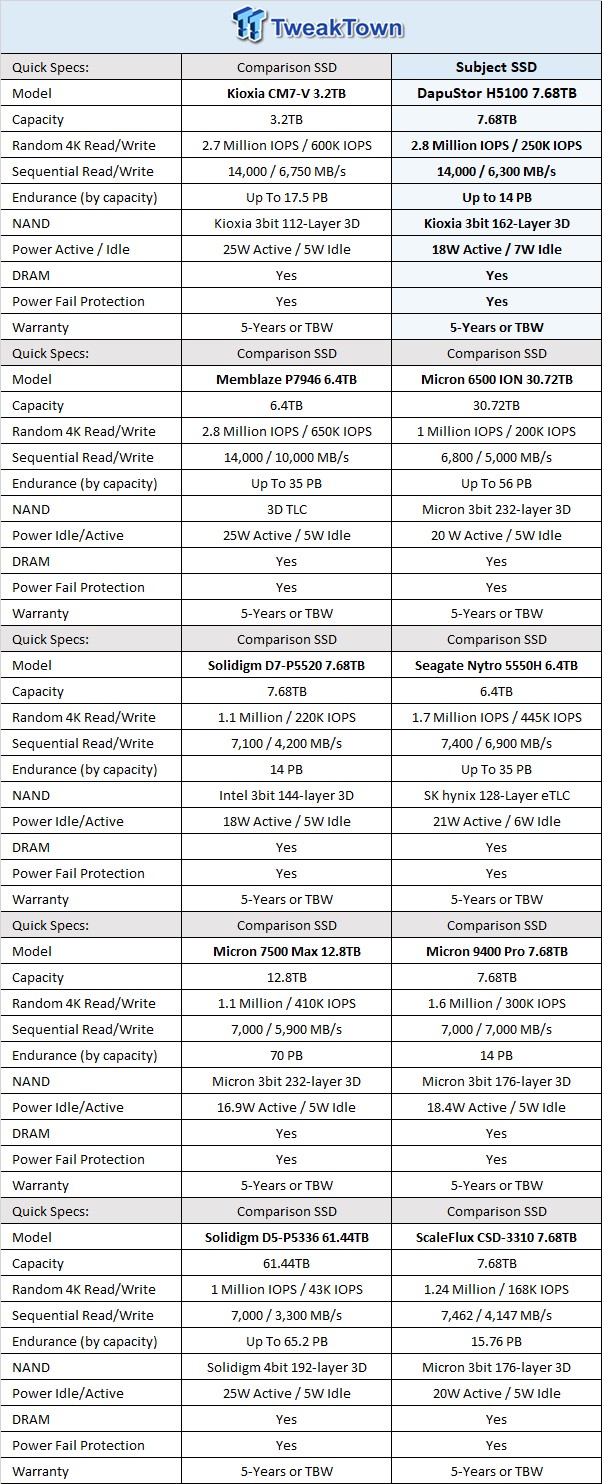

PCIe Gen5 SSDs are now making their way into datacenters across the globe. With double the potential throughput of PCIe Gen4, PCIe Gen5 SSDs are significantly more efficient overall than their Gen4 counterparts, as measured in IOPS per watt or IOPS per unit of footprint. To date we've tested two PCIe Gen5 enterprise offerings: the first is KIOXIA's CM7-V, a 3-DWPD mixed-workload specialist capable of 2.8 million IOPS; the second is Memblaze's P7946, also a 3-DWPD mixed-workload specialist.
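To put "double the potential throughput" in numbers, here is a small sketch of the raw x4 link ceiling for each generation. It uses the per-lane signalling rates from the PCIe specifications (16 GT/s for Gen4, 32 GT/s for Gen5) and 128b/130b line encoding; it ignores packet and protocol overhead, so real drives land somewhat below these figures.

```python
# Per-lane signalling rates in GT/s; Gen3 and later use 128b/130b line encoding.
GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}
ENCODING_EFFICIENCY = 128 / 130


def link_bandwidth_gb_per_s(gen: int, lanes: int = 4) -> float:
    """Raw one-direction link bandwidth in GB/s after line encoding.

    Packet/protocol overhead is ignored, so this is an upper bound.
    """
    bits_per_s = GT_PER_S[gen] * 1e9 * ENCODING_EFFICIENCY * lanes
    return bits_per_s / 8 / 1e9


if __name__ == "__main__":
    for gen in (4, 5):
        print(f"PCIe Gen{gen} x4: {link_bandwidth_gb_per_s(gen):.2f} GB/s")
```

This prints roughly 7.88 GB/s for Gen4 x4 and 15.75 GB/s for Gen5 x4, which is the headroom a Gen5 U.2 drive has to work with.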

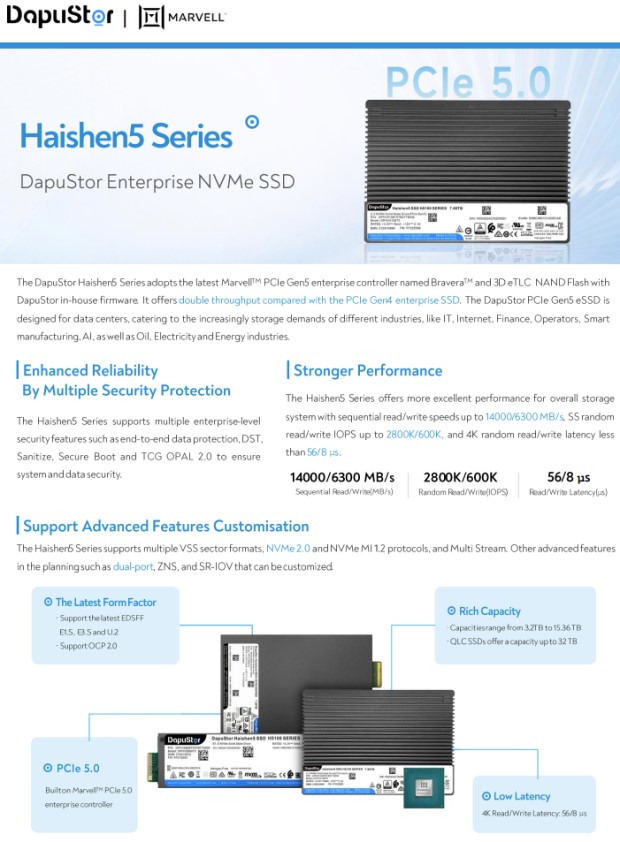

The subject of today's review is another PCIe Gen5 contender, this one from China-based enterprise SSD company DapuStor. DapuStor has already earned recognition for class-leading performance with its PCIe Gen4 lineup. Now we get a chance to see whether DapuStor does PCIe Gen5 the same way.

Our test subject is not always directly comparable to the two aforementioned PCIe Gen5 competitors, because it is a 1-DWPD, read-intensive SSD rather than a 3-DWPD mixed-workload specialist. We can compare directly on 100% read workloads, where a 3-DWPD rating confers no advantage, but not when writes make up all or part of the workload.
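DWPD (drive writes per day) translates into total rated endurance as DWPD × capacity × days in the warranty period. The sketch below assumes a 5-year warranty purely for illustration; the review does not state the H5100's warranty term, and the 3-DWPD line is a hypothetical drive of the same capacity, not one of the competitors tested.

```python
def rated_tbw(dwpd: float, capacity_tb: float, warranty_years: float = 5.0) -> float:
    """Terabytes written implied by a DWPD rating over the warranty period.

    warranty_years=5 is an assumption; check the vendor's spec sheet.
    """
    return dwpd * capacity_tb * 365 * warranty_years


if __name__ == "__main__":
    # 1-DWPD H5100 7.68TB vs. a hypothetical 3-DWPD drive of the same capacity.
    print(f"1 DWPD: {rated_tbw(1, 7.68):,.0f} TB")
    print(f"3 DWPD: {rated_tbw(3, 7.68):,.0f} TB")
```

Under that assumption the 7.68TB drive works out to about 14,016 TB written, versus about 42,048 TB for a same-capacity 3-DWPD part, which is why write-heavy results are not an apples-to-apples comparison.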

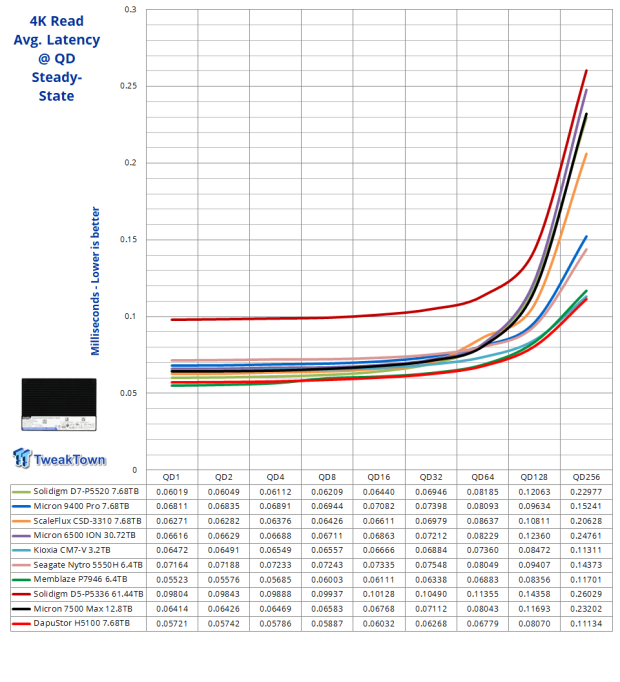

Judging from the title of this review, you have probably already guessed that the DapuStor H5100 we are testing today delivers random read performance exceeding that of anything flash-based we've tested to date. Correct, it does. However, it doesn't win on the highest attainable figure; it wins where performance matters most, at levels that are practically attainable. We are of course referring to low queue depth performance, meaning queue depths of 32 or less, where 99% of all transactions are carried out. This is where performance matters the most, and the lower the queue depth, the more it matters.
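One way to see why low queue depth results are so telling is Little's law: sustained throughput equals the number of outstanding requests divided by the average time each one takes. At QD1 there is only one command in flight, so IOPS is simply the inverse of per-command latency. The latencies in this sketch are hypothetical round numbers, not measurements from this review.

```python
def iops_from_littles_law(queue_depth: int, avg_latency_us: float) -> float:
    """Little's law: throughput = outstanding requests / average time per request."""
    return queue_depth / (avg_latency_us / 1e6)


if __name__ == "__main__":
    # Hypothetical QD1 latencies, for illustration only.
    for lat_us in (100.0, 80.0):
        print(f"QD1 @ {lat_us:.0f} us -> {iops_from_littles_law(1, lat_us):,.0f} IOPS")
```

Shaving 100 µs down to 80 µs lifts QD1 from 10,000 to 12,500 IOPS. At high queue depths the drive can hide latency behind parallelism; at QD1-4 it cannot, so a low-QD lead is effectively a latency lead.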

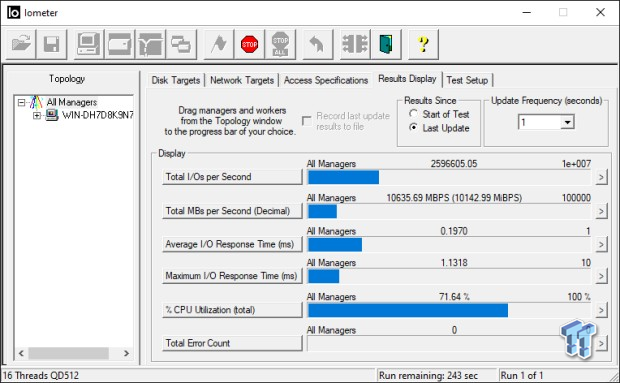

DapuStor has tuned its Gen5 SSD to deliver the goods where it matters most, and at low queue depths it does indeed deliver the most performance we've tested to date, which is why we have crowned it the "Random Read Champion". The H5100 7.68TB we have on the bench today is spec'd for up to 2.8 million IOPS. We have no doubt that it can attain this speed under the right conditions; with our configuration, however, we topped out at 2.6 million IOPS.

This is 200K IOPS lower than we were able to extract from the CM7-V and Memblaze P7946. However, maximum random throughput is rarely attainable in practice, so we instead focus on performance at attainable queue depths.

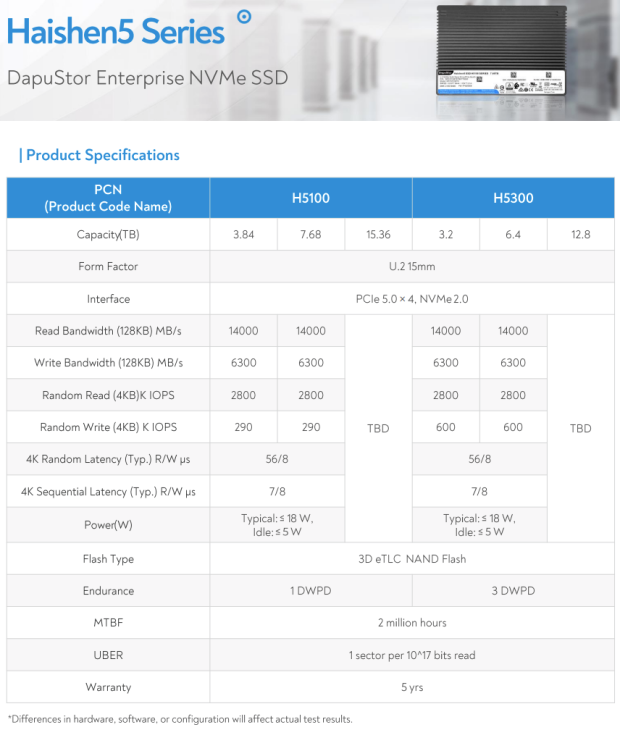

Specs/Comparison Products

DapuStor currently offers its Haishen5 series at capacity points ranging from 3.2TB to 15.36TB across three form factors: 2.5-inch U.2, E1.S, and E3.S. The drive we have in hand is a U.2 model built on a 16-channel Marvell Bravera controller with 162-layer KIOXIA BiCS 6 flash. We note the drive is spec'd at 18 watts active, which is very low compared with the 25 watts active we've come to expect from PCIe Gen5 SSDs. If true, this is remarkable indeed, and we tend to believe it because the drive is by far the coolest-running Gen5 SSD we've ever tested.
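The efficiency argument from the introduction can be made concrete with the spec-sheet figures. This sketch divides the rated 2.8 million IOPS by the rated 18 W, and compares it with a hypothetical drive delivering the same IOPS at the 25 W typical of Gen5 SSDs. These are spec numbers, not measured power draw at peak IOPS, which can differ.

```python
def iops_per_watt(iops: float, watts: float) -> float:
    """Simple efficiency metric: IOPS delivered per watt of active power."""
    return iops / watts


if __name__ == "__main__":
    spec = iops_per_watt(2_800_000, 18)     # H5100 spec-sheet figures
    typical = iops_per_watt(2_800_000, 25)  # same IOPS at a hypothetical 25 W
    print(f"{spec:,.0f} vs {typical:,.0f} IOPS/W ({spec / typical - 1:.0%} better)")
```

That works out to about 155,556 versus 112,000 IOPS per watt, roughly 39% better on paper.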

DapuStor Haishen5 H5100 7.68TB NVMe PCIe Gen5 x4 U.2 SSD

Enterprise Testing Methodology

TweakTown strictly adheres to industry-accepted Enterprise Solid State Storage testing procedures. Each test we perform repeats the same sequence of the following four steps:

1. Secure erase the SSD

2. Write the entire capacity of the SSD at least twice with 128KB sequential writes, then seamlessly transition to the next step

3. Precondition the SSD at the maximum measured QD (QD32 for SATA, QD256 for PCIe) with the test-specific workload long enough to reach a constant steady state, then seamlessly transition to the next step

4. Run the test-specific workload for 5 minutes at each measured queue depth, and record the results
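TweakTown doesn't say which tool drives these four steps. As a sketch only, assuming the open-source fio benchmark and nvme-cli on Linux, the sequence could be expressed as the command list below. The device path, the queue-depth sweep, and the queue depth used for the fill pass are hypothetical choices, and the commands are only printed, never executed.

```python
from typing import List, Sequence

# Hypothetical target device. These commands destroy all data on it.
DEVICE = "/dev/nvme0n1"
# Assumed QD sweep; the review does not list every queue depth it measures.
QUEUE_DEPTHS = [1, 2, 4, 8, 16, 32, 64, 128, 256]
PER_QD_RUNTIME_S = 300  # step 4: 5 minutes per queue depth


def fio_cmd(name: str, rw: str, bs: str, iodepth: int,
            runtime_s: int = 0, extra: Sequence[str] = ()) -> str:
    """Build one fio command line using direct (unbuffered) async I/O."""
    args = ["fio", f"--name={name}", f"--filename={DEVICE}",
            "--ioengine=libaio", "--direct=1",
            f"--rw={rw}", f"--bs={bs}", f"--iodepth={iodepth}"]
    if runtime_s:
        # Run for a fixed wall-clock time instead of a fixed amount of data.
        args += [f"--runtime={runtime_s}", "--time_based"]
    args += list(extra)
    return " ".join(args)


def build_plan(rw: str, bs: str, precondition_s: int,
               extra: Sequence[str] = ()) -> List[str]:
    """Return the four-step sequence as shell commands (not executed here)."""
    plan = [
        # Step 1: secure erase via nvme-cli (--ses=1 requests a user-data erase).
        f"nvme format {DEVICE} --ses=1",
        # Step 2: 128K sequential fill of the whole device, two passes.
        # The fill QD is an assumption; the methodology doesn't state one.
        fio_cmd("fill", "write", "128k", 32, extra=["--loops=2"]),
        # Step 3: precondition at QD256 (the PCIe maximum) with the test workload.
        fio_cmd("precondition", rw, bs, 256, precondition_s, extra),
    ]
    # Step 4: the measured sweep, one fixed-length run per queue depth.
    plan += [fio_cmd(f"qd{qd}", rw, bs, qd, PER_QD_RUNTIME_S, extra)
             for qd in QUEUE_DEPTHS]
    return plan


if __name__ == "__main__":
    for cmd in build_plan("randread", "4k", 16_000):
        print(cmd)
```

For a mixed test such as 4K 70/30, the same builder would take `rw="randrw"` with `extra=["--rwmixread=70"]`. Separate command invocations leave small gaps between steps, so a harness that honours the "seamlessly transition" requirement would more likely chain the jobs inside a single fio job file using `stonewall`.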

Benchmarks - Random and Sequential

- 4K Random Write/Read

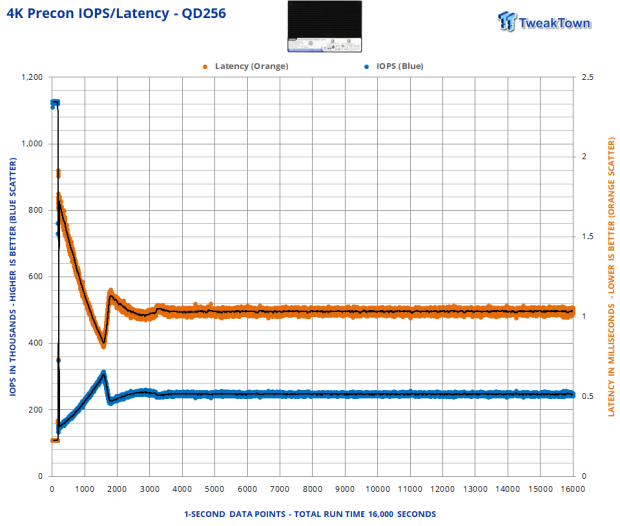

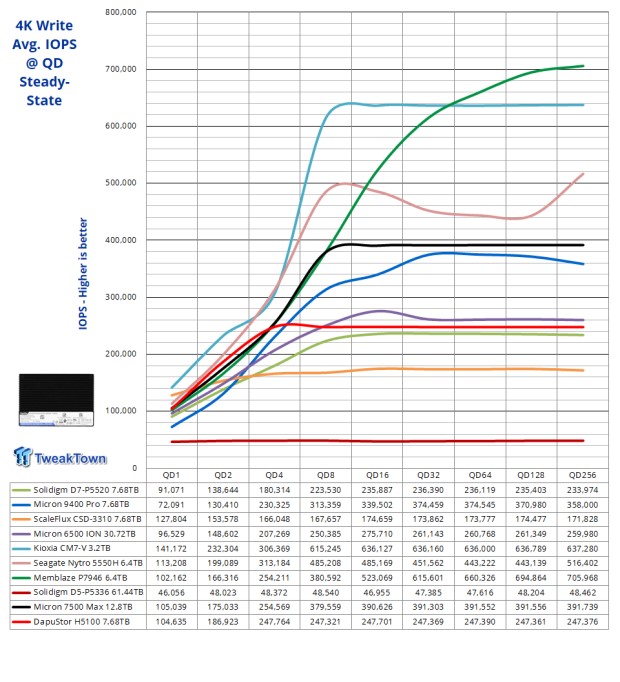

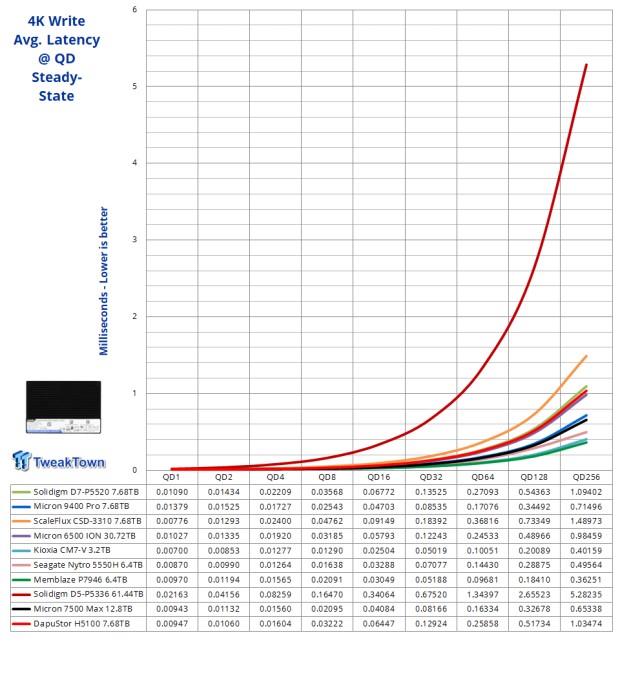

We precondition the drive for 16,000 seconds, receiving performance data every second. We plot this data to observe the test subject's descent into steady-state and to verify steady-state is in effect as we seamlessly transition into testing at queue depth. Steady state is achieved at 4,000 seconds of preconditioning. Average steady-state write performance at QD256 is approximately 247K IOPS.
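The review doesn't specify how the steady-state point is determined from the per-second data. A common reference is the SNIA Solid State Storage Performance Test Specification, whose criterion requires that within a measurement window the data excursion (max minus min) stays within 20% of the average, and the best-fit line moves by no more than 10% of the average. The sketch below applies that SNIA-style test to sliding windows of per-second samples; the 600-second window and 60-second step are hypothetical parameters, and the trace is synthetic.

```python
from statistics import mean
from typing import Optional, Sequence


def is_steady(window: Sequence[float], data_tol: float = 0.20,
              slope_tol: float = 0.10) -> bool:
    """SNIA PTS-style check on one measurement window.

    Data excursion (max - min) must be within data_tol of the window average,
    and the least-squares best-fit line must move by no more than slope_tol
    of the average across the window.
    """
    n = len(window)
    if n < 2:
        raise ValueError("need at least two samples to fit a line")
    avg = mean(window)
    if max(window) - min(window) > data_tol * avg:
        return False
    # Least-squares slope with x = 0, 1, ..., n-1 (one sample per second).
    x_mean = (n - 1) / 2
    sxx = sum((i - x_mean) ** 2 for i in range(n))
    sxy = sum((i - x_mean) * (y - avg) for i, y in enumerate(window))
    slope = sxy / sxx
    return abs(slope) * (n - 1) <= slope_tol * avg


def steady_state_start(samples: Sequence[float], window_s: int = 600,
                       step_s: int = 60) -> Optional[int]:
    """First window start (in seconds) whose samples pass is_steady, else None."""
    for start in range(0, len(samples) - window_s + 1, step_s):
        if is_steady(samples[start:start + window_s]):
            return start
    return None


if __name__ == "__main__":
    # Synthetic trace: IOPS decays linearly, then flattens at 250K after 3,500 s.
    trace = [max(250_000, 600_000 - 100 * t) for t in range(6_000)]
    print(steady_state_start(trace))
```

The slope check matters as much as the excursion check: a slowly but steadily declining trace can stay inside a 20% band while still trending downward, and only the best-fit line catches it.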

A pure write workload is the nemesis of any read-intensive SSD, so we aren't expecting a whole lot from a drive rated for up to 290K random write IOPS. We do, however, note that our test subject delivers more at QD1 and QD2 than the PCIe Gen5 Memblaze P7946.

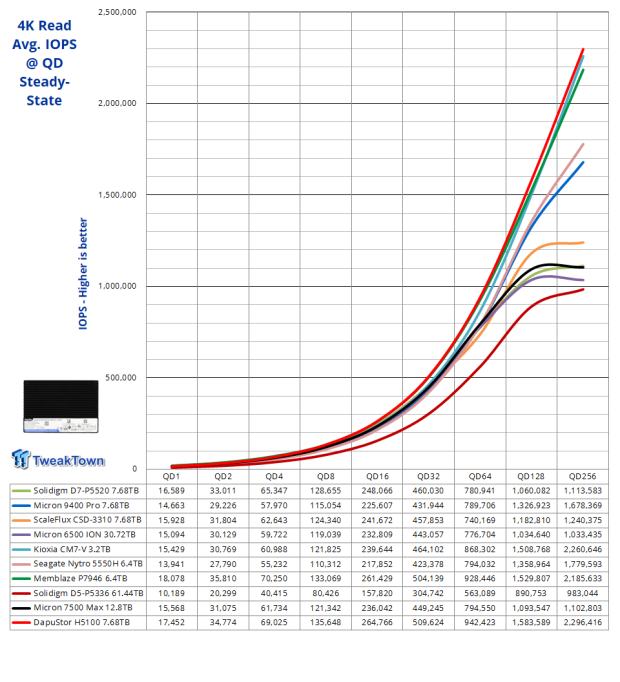

Overall, this is the best performance curve we've ever attained from any flash-based SSD. This is especially relevant because 4K random reads are arguably the most important performance metric, most of the time. Results here are why we are crowning the H5100 as "Random Read Champion". Impressive.

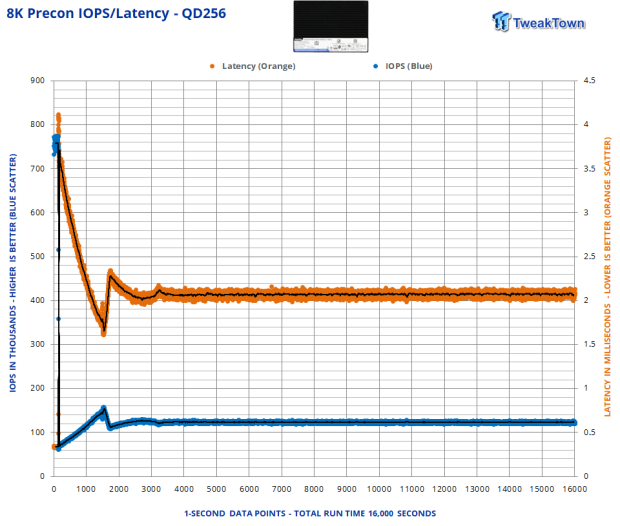

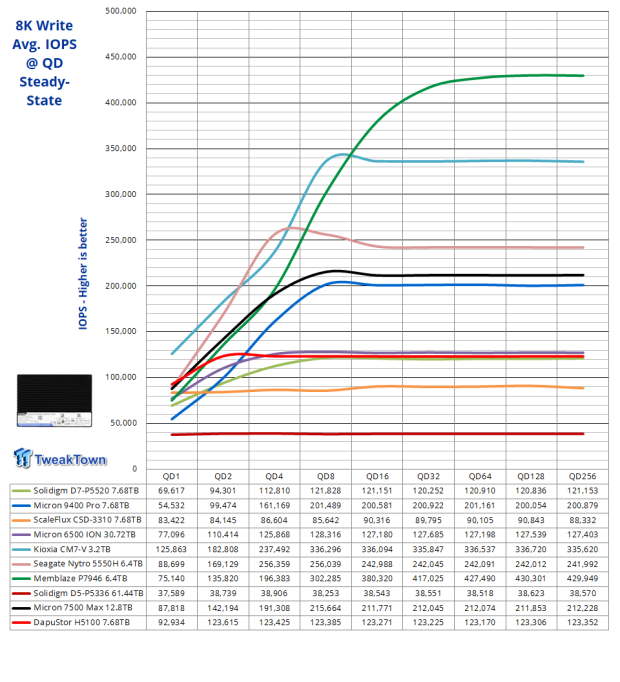

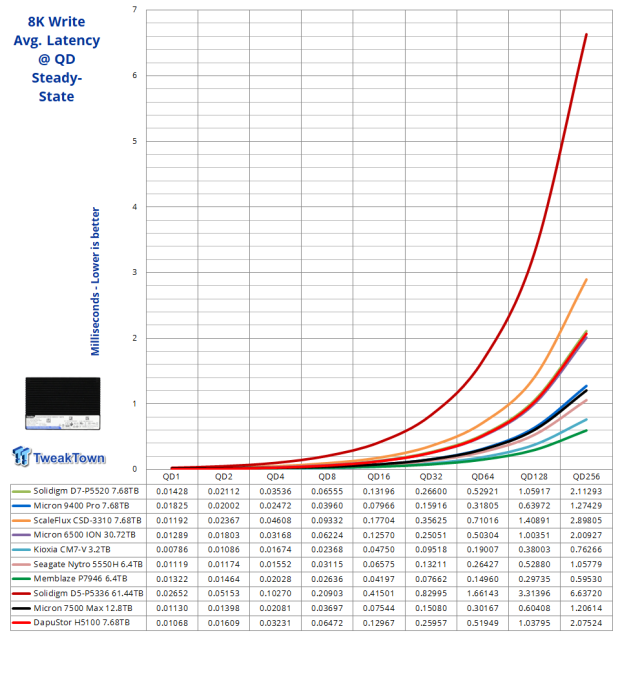

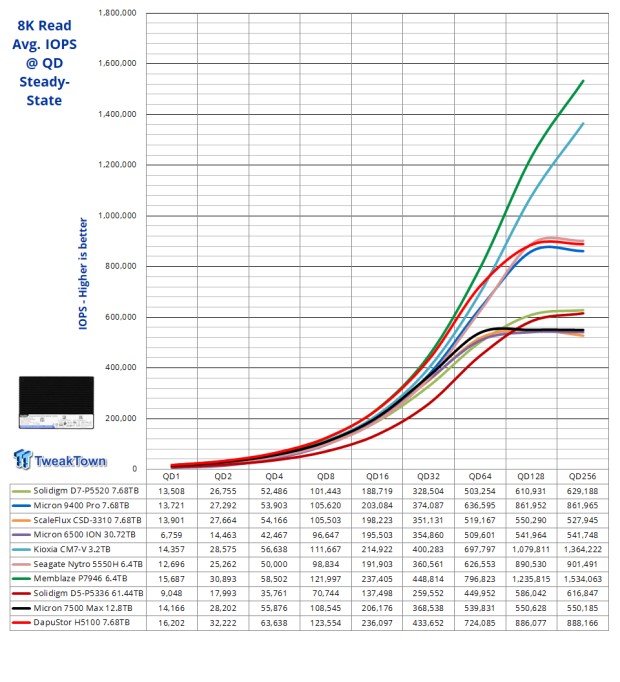

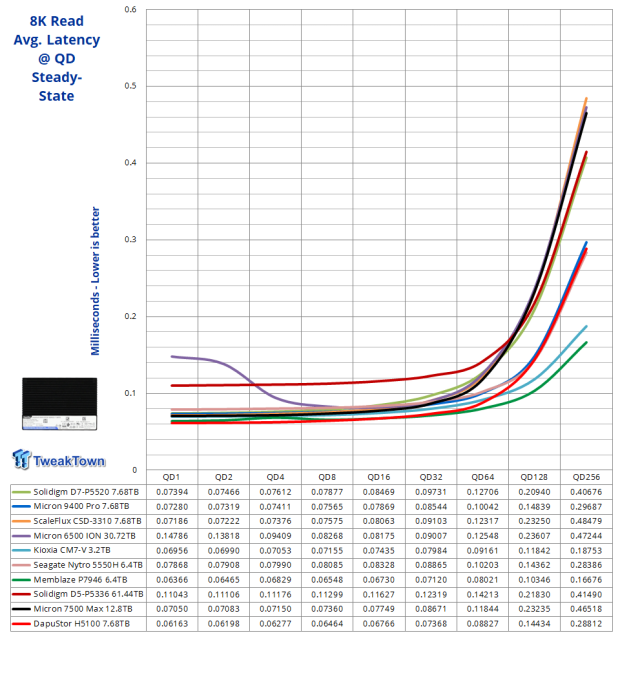

- 8K Random Write/Read

We precondition the drive for 16,000 seconds, receiving performance data every second. We plot this data to observe the test subject's descent into steady-state and to verify steady-state is in effect as we seamlessly transition into testing at queue depth. Steady state is achieved at 4,000 seconds of preconditioning. Average steady-state write performance at QD256 is approximately 123K IOPS.

We expect 8K random to track much like 4K random, just at roughly half the IOPS, because each operation moves twice the amount of data. Overall performance here is unremarkable, as expected given the drive's random write rating. However, we do note that our test subject delivers the second-best QD1 result we've ever recorded. Additionally, the drive reaches full speed at QD2, which is again impressive.
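Converting the two steady-state write figures to bandwidth shows why 8K lands at about half the IOPS of 4K. This sketch treats 4K and 8K as 4,096- and 8,192-byte blocks and reports decimal MB/s.

```python
def iops_to_mb_per_s(iops: float, block_kib: int) -> float:
    """Throughput in decimal MB/s for a given IOPS and block size in KiB."""
    return iops * block_kib * 1024 / 1e6


if __name__ == "__main__":
    print(f"4K @ 247K IOPS: {iops_to_mb_per_s(247_000, 4):,.0f} MB/s")
    print(f"8K @ 123K IOPS: {iops_to_mb_per_s(123_000, 8):,.0f} MB/s")
```

Both come out at roughly 1,010 MB/s (about 1,012 and 1,008 MB/s), so the drive sustains essentially the same steady-state write bandwidth at either block size.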

Again, we find our test subject delivering more than we've ever recorded from a flash-based SSD and doing so where it matters most, at low queue depths. At QD1-8 where most transactions take place, our test subject rules them all. Outstanding.

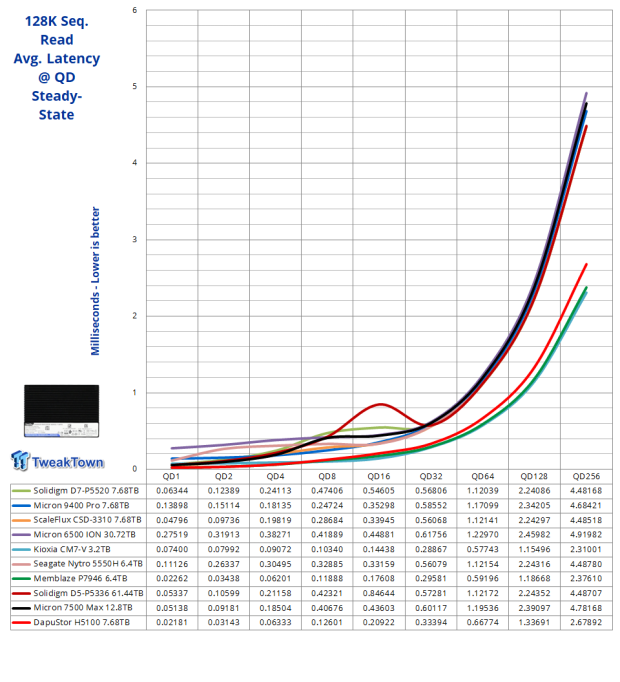

- 128K Sequential Write/Read

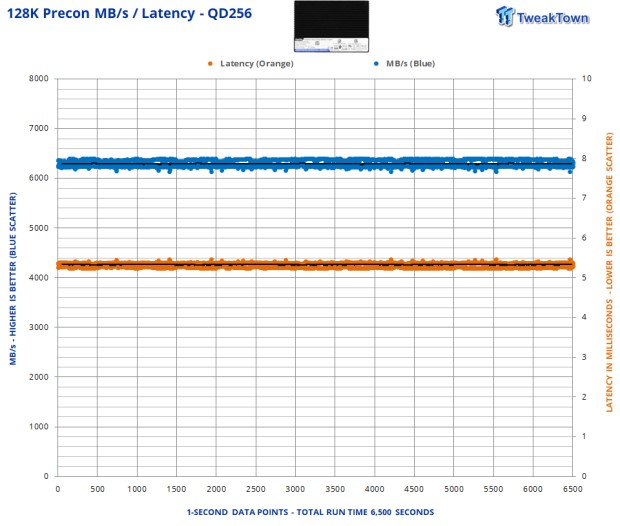

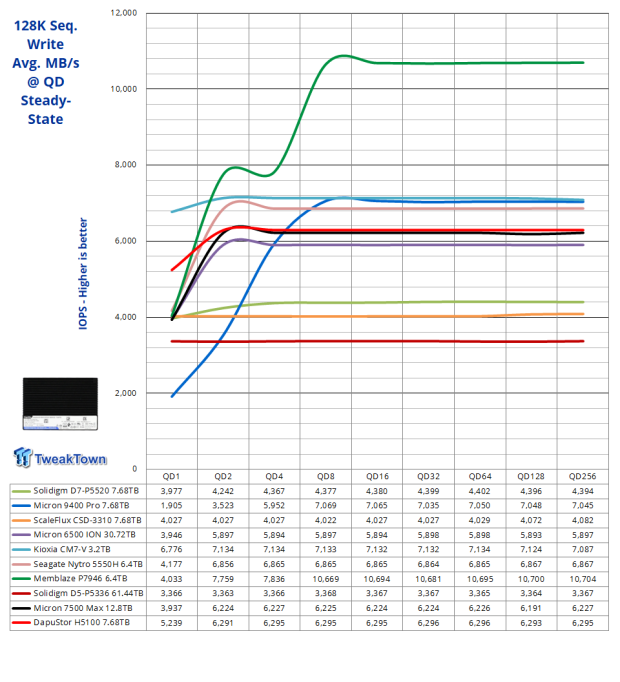

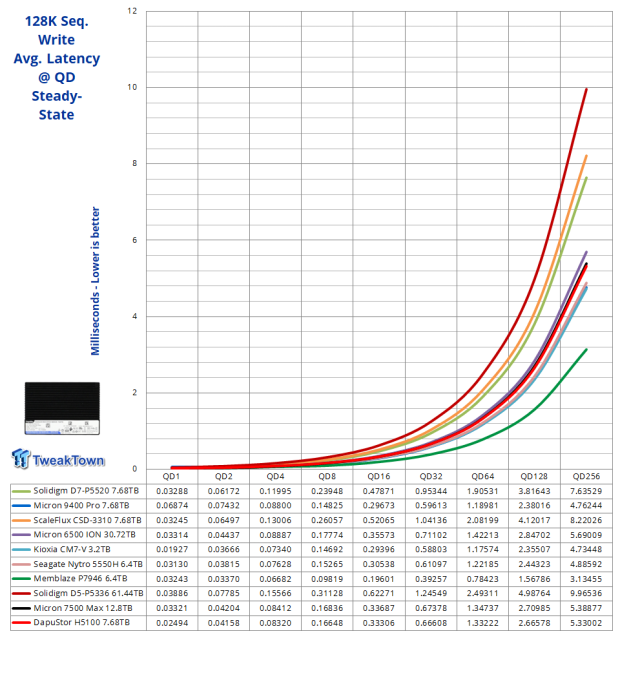

We precondition the drive for 6,500 seconds, receiving performance data every second. Steady state for this test kicks in at 0 seconds. Average steady-state sequential write performance at QD256 is approximately 6,295 MB/s.

Our test subject delivers the advertised 6,300 MB/s, which in and of itself is unremarkable, but we do note that at the all-important QD1 it delivers the second-best result we've ever recorded.

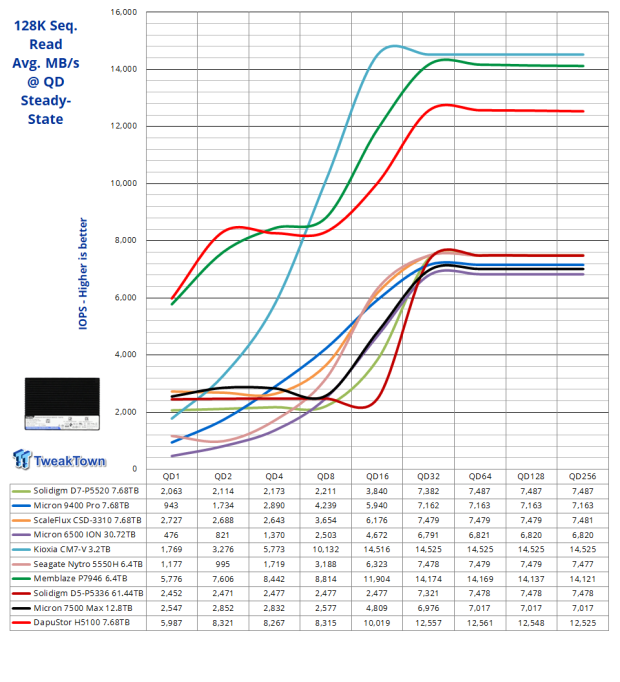

Although our test subject falls short of 14,000 MB/s by 1,450 MB/s (roughly 12,550 MB/s), we are nonetheless impressed with its class-leading throughput at QD1-2. This is where performance matters most, most of the time.

Benchmarks - Workloads

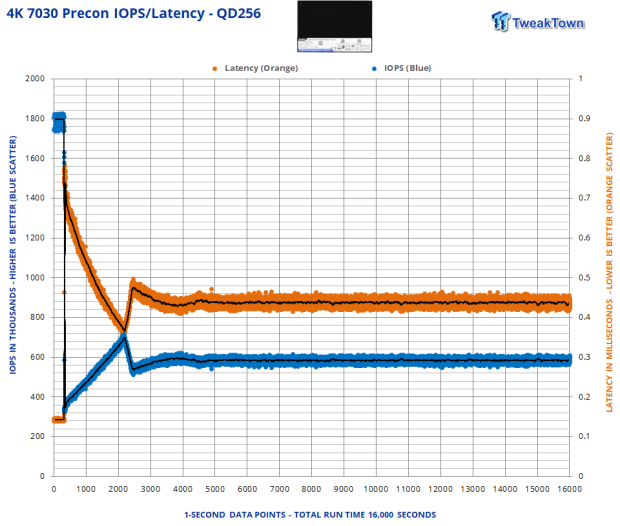

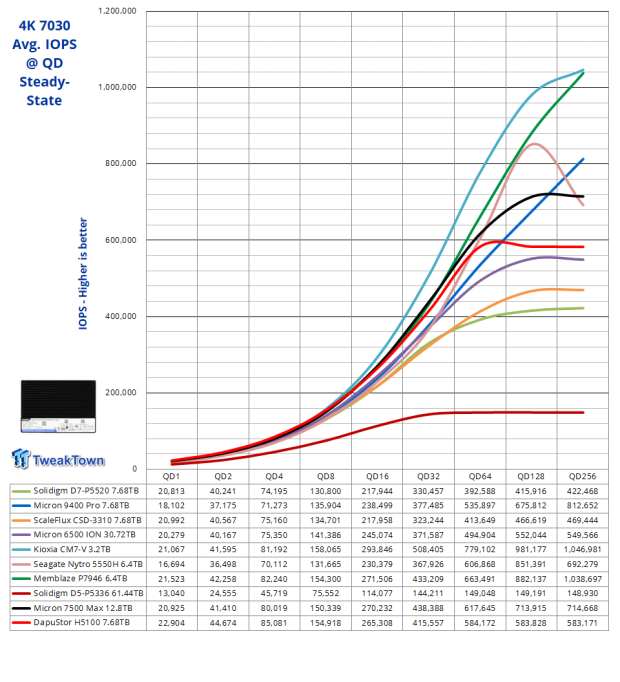

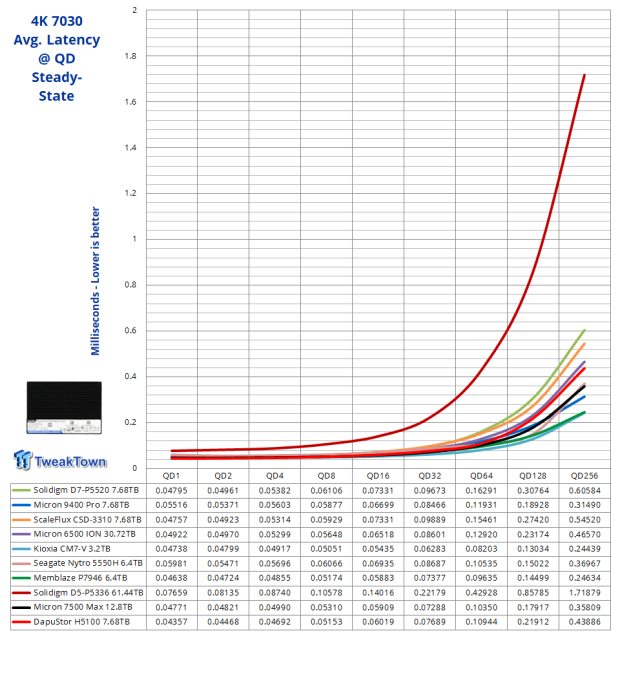

- 4K 70/30

4K 70/30 (a 4K workload of 70% reads and 30% writes) is a commonly quoted workload performance metric for enterprise SSDs.

We precondition the drive for 16,000 seconds, receiving performance data every second. We plot this data to observe the test subject's descent into steady-state and to verify steady-state is in effect as we seamlessly transition into testing at queue depth. Steady state is achieved at 5,000 seconds of preconditioning. Average steady-state performance at QD256 is approximately 583K IOPS.

Considering 30% of this workload is write, we are surprised to see our test subject perform so well here. Yet again, the H5100 sets a new lab record where it matters most, QD1-4. Impressive.

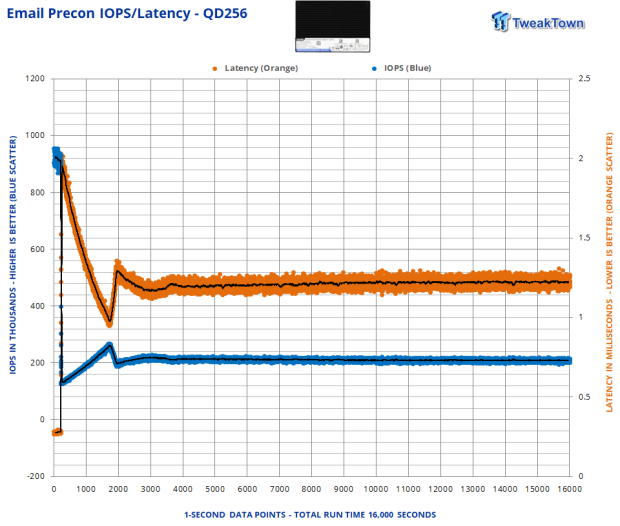

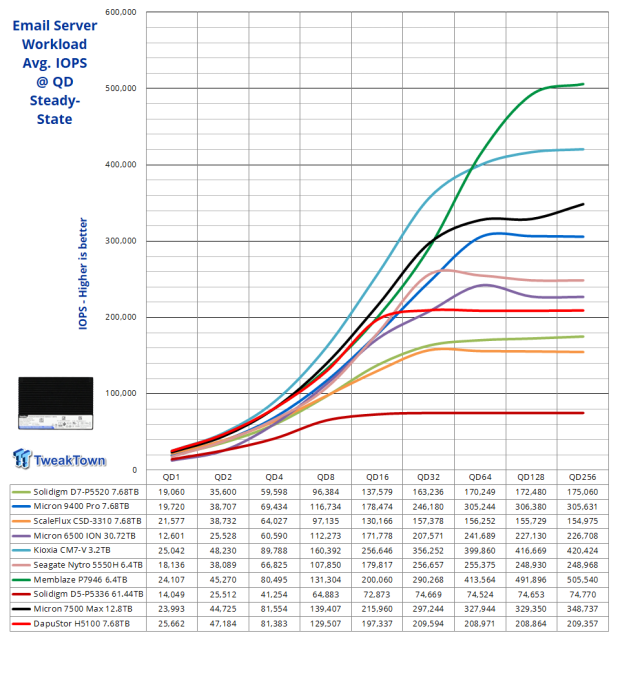

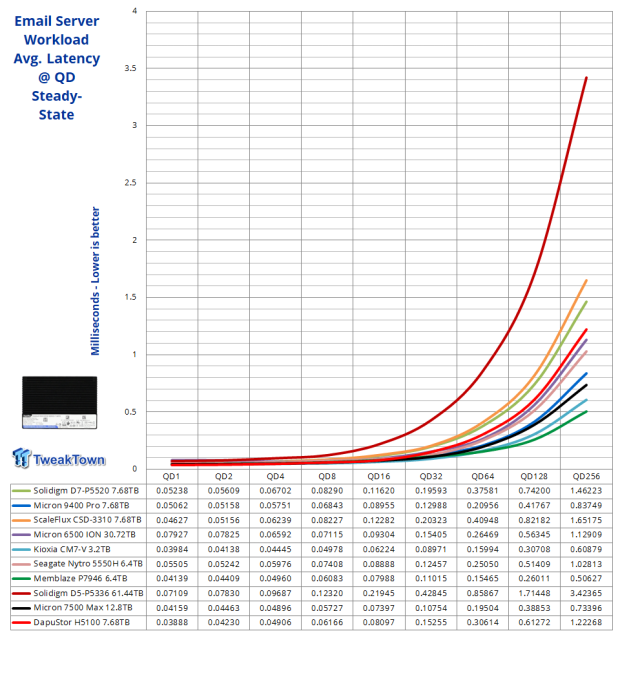

Email Server

Our Email Server workload is a demanding 8K test with a 50 percent R/W distribution. This application gives a good indication of how well a drive will perform in a write-heavy workload environment.

We precondition the drive for 16,000 seconds, receiving performance data every second. We plot this data to observe the test subject's descent into steady-state and to verify steady-state is in effect as we seamlessly transition into testing at queue depth. Steady state is achieved at 5,000 seconds of preconditioning. Average steady-state performance at QD256 is approximately 505K IOPS.

Another win at QD1. Remarkable really, considering this is a 50% write workload.

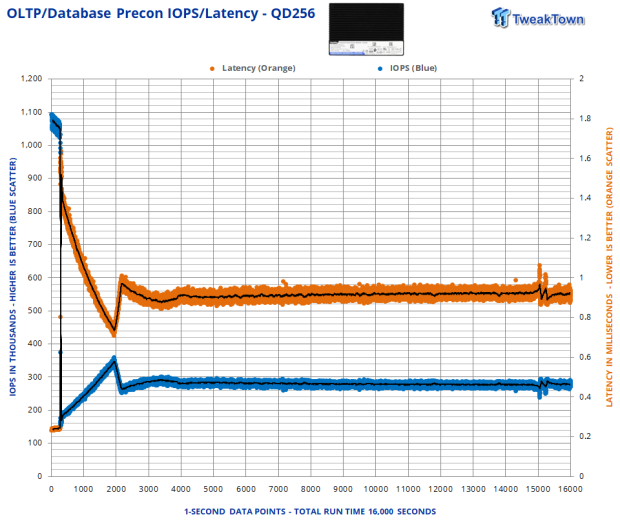

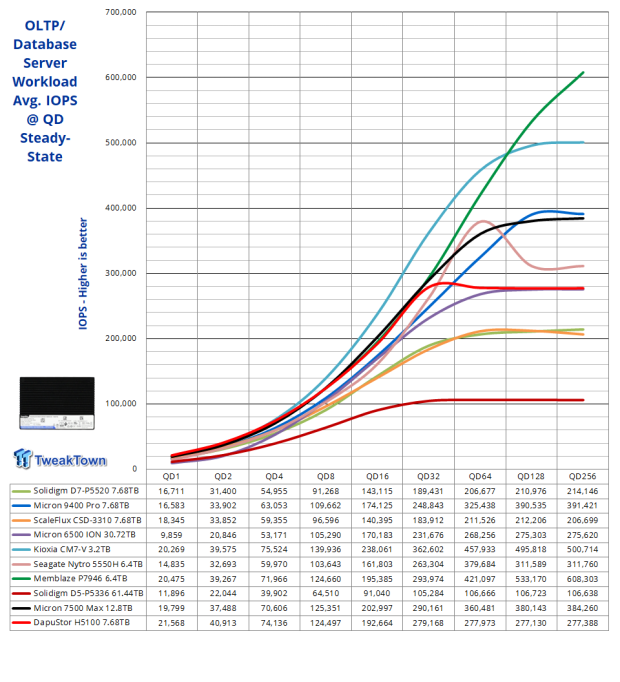

OLTP/Database Server

Our On-Line Transaction Processing (OLTP) / Database workload is a demanding 8K test with a 66/33 percent R/W distribution. Typical OLTP workloads include online processing of financial transactions and high-frequency trading.

We precondition the drive for 16,000 seconds, receiving performance data every second. We plot this data to observe the test subject's descent into steady-state and to verify steady-state is in effect as we seamlessly transition into testing at queue depth. Steady state is achieved at 5,000 seconds of preconditioning. Average steady-state performance at QD256 is approximately 277K IOPS.

This time our test subject delivers another lab record at QD1-2. At this point we are no longer surprised to see the H5100 dominating at low queue depths. This is what the drive is all about.
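The steady-state QD256 figures for the three mixed workloads are aggregate IOPS. A quick sketch of how each splits between reads and writes, assuming the R/W ratio applies to operation counts rather than bytes, and reading "66/33" as two-thirds reads and one-third writes:

```python
from typing import Tuple


def split_iops(total_iops: float, read_pct: float) -> Tuple[float, float]:
    """Split aggregate IOPS into (read, write) shares for a given read percentage."""
    reads = total_iops * read_pct / 100
    return reads, total_iops - reads


if __name__ == "__main__":
    workloads = {
        "4K 70/30": (583_000, 70),
        "Email Server 50/50": (505_000, 50),
        # "66/33" read as two-thirds reads, one-third writes (assumption).
        "OLTP 66/33": (277_000, 200 / 3),
    }
    for name, (total, read_pct) in workloads.items():
        r, w = split_iops(total, read_pct)
        print(f"{name}: {r:,.0f} read + {w:,.0f} write IOPS")
```

That gives about 408,100 read plus 174,900 write IOPS for 4K 70/30, 252,500 of each for Email Server, and about 184,667 read plus 92,333 write IOPS for OLTP.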

Web Server

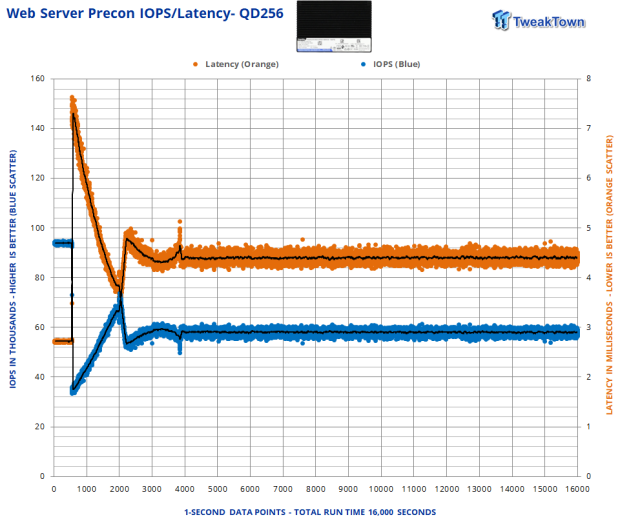

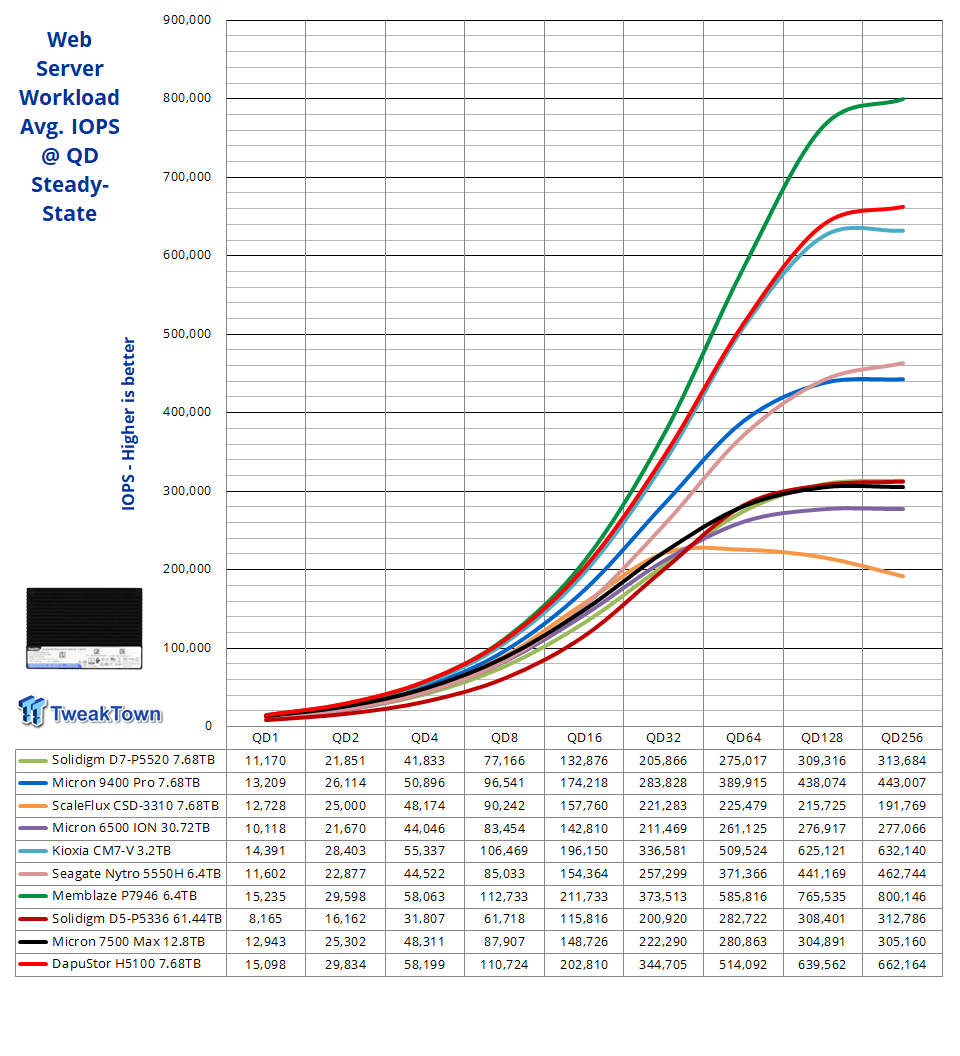

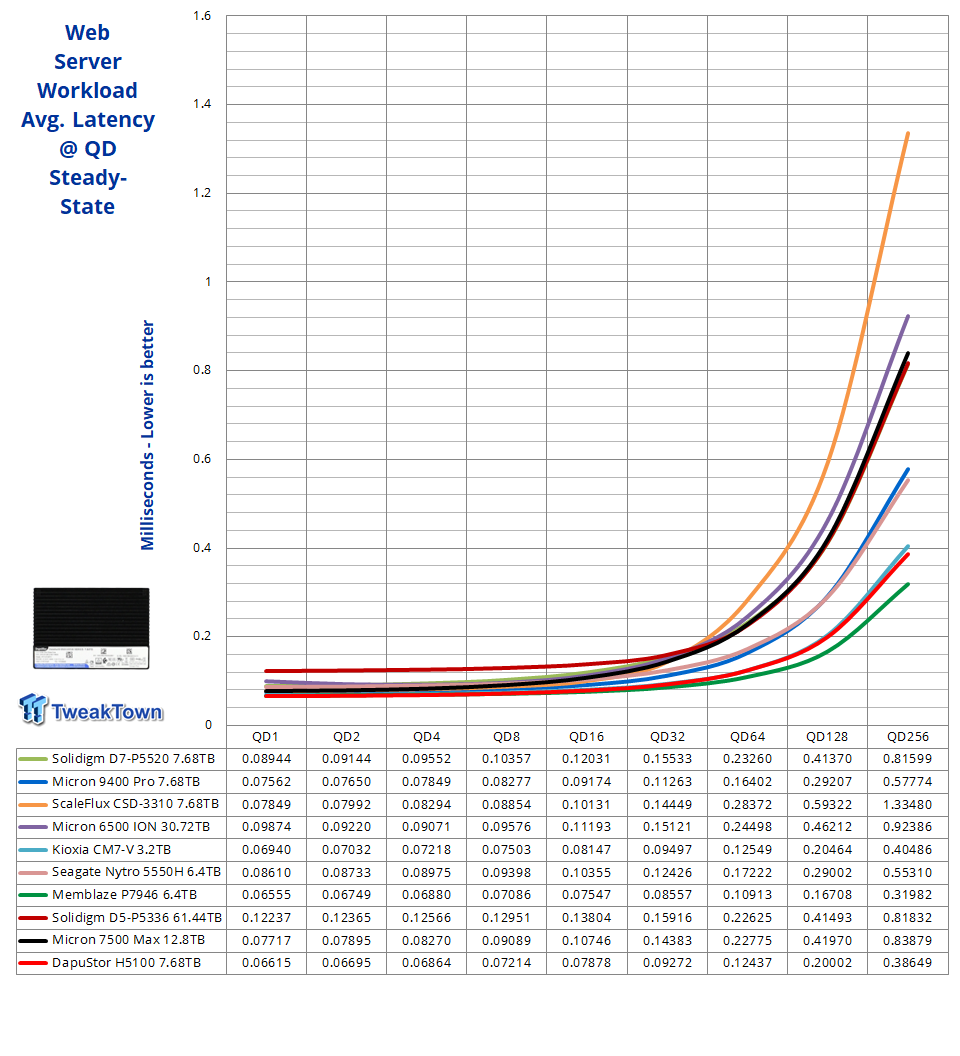

Our Web Server workload is a pure random read test with a wide range of file sizes, ranging from 512B to 512KB at varying percentage rates per file size.

We precondition the drive for 16,000 seconds, receiving performance data every second. We plot this data to observe the test subject's descent into steady-state and to verify steady-state is in effect as we seamlessly transition into testing at queue depth. We precondition for this test with an inverted (all-write) workload, so no relevant information can be gleaned from this preconditioning other than verification of steady state.

Aside from delivering what we consider the second-best overall performance curve we've witnessed to date from a flash-based SSD, our contender sets the bar at QD2-4. Impressive.

Final Thoughts

DapuStor's Haishen5 H5100 7.68TB SSD is a somewhat different beast from anything we've experienced before. It is highly tuned for performance at low queue depths. Throughout our testing, the drive consistently delivered new lab bests for a flash-based SSD where it arguably matters most, at queue depths up to 4.

Data shows that in the datacenter, just as in the consumer space, random reads at low queue depths are where performance matters most, most of the time. This is where the H5100 thrives. Even when mixed workloads are set before it, our 1-DWPD test subject still outperforms everything else, including our two 3-DWPD PCIe Gen5 competitors, more often than not at QD1-4.

Especially impressive is what the drive can do with pure 4K random reads, where it delivers the best overall performance curve we've witnessed to date. The H5100 also crushed our 100% random read Web Server test, where it delivered the second-best performance we've encountered to date, even beating the CM7-V. Additionally, if the manufacturer's spec sheet is to be believed, it is doing this at 18 watts active, which is significantly lower power consumption than any Gen5 SSD in its class that we know of.

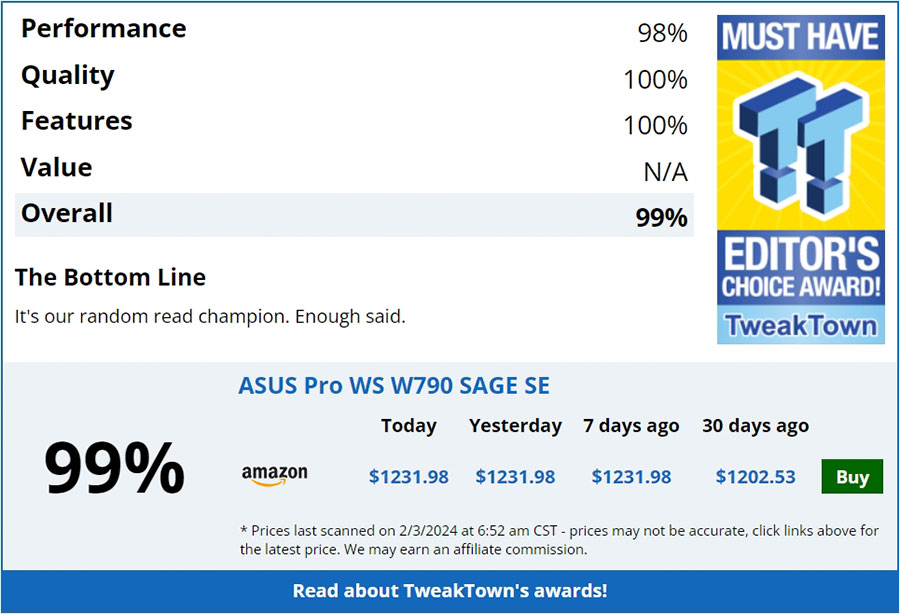

DapuStor's Haishen5 H5100 7.68TB is the random read champion, low queue depth mixed workload champion, and, as such, has earned our highest award. Editor's Choice.

"